Foreground/background segmentation using imager

Simon Barthelmé (GIPSA-lab, CNRS)

Foreground-background separation is a segmentation task, where the goal is to split the image into foreground and background. In semi-interactive settings, the user marks some pixels as “foreground”, a few others as “background”, and it’s up to the algorithm to classify the rest of the pixels.

1 K-nearest neighbour approach

The first approach is similar to the SIOX algorithm implemented in the Gimp. It assumes that foreground and background have different colours, and models the segmentation task as a (supervised) classification problem, where the user has provided examples of foreground pixels, examples of background pixels, and we need to classify the rest of the pixels according to colour. Since we do not have a parametric model in mind, a simple and robust approach is to use k-nearest neighbour classification.

There are many implementations of the kNN algorithm available for R but the fastest one I’ve found is in the nabor package. The following function implements (binary) knn classification:

##X is training data

##Xp is test data

##cl: labels for the rows of X

##k = number of neighbours

##Returns average value of k nearest neighbours

fknn <- function(X,Xp,cl,k=1)

{

out <- nabor::knn(X,Xp,k=k)

cl[as.vector(out$nn.idx)] %>% matrix(dim(out$nn.idx)) %>% rowMeans

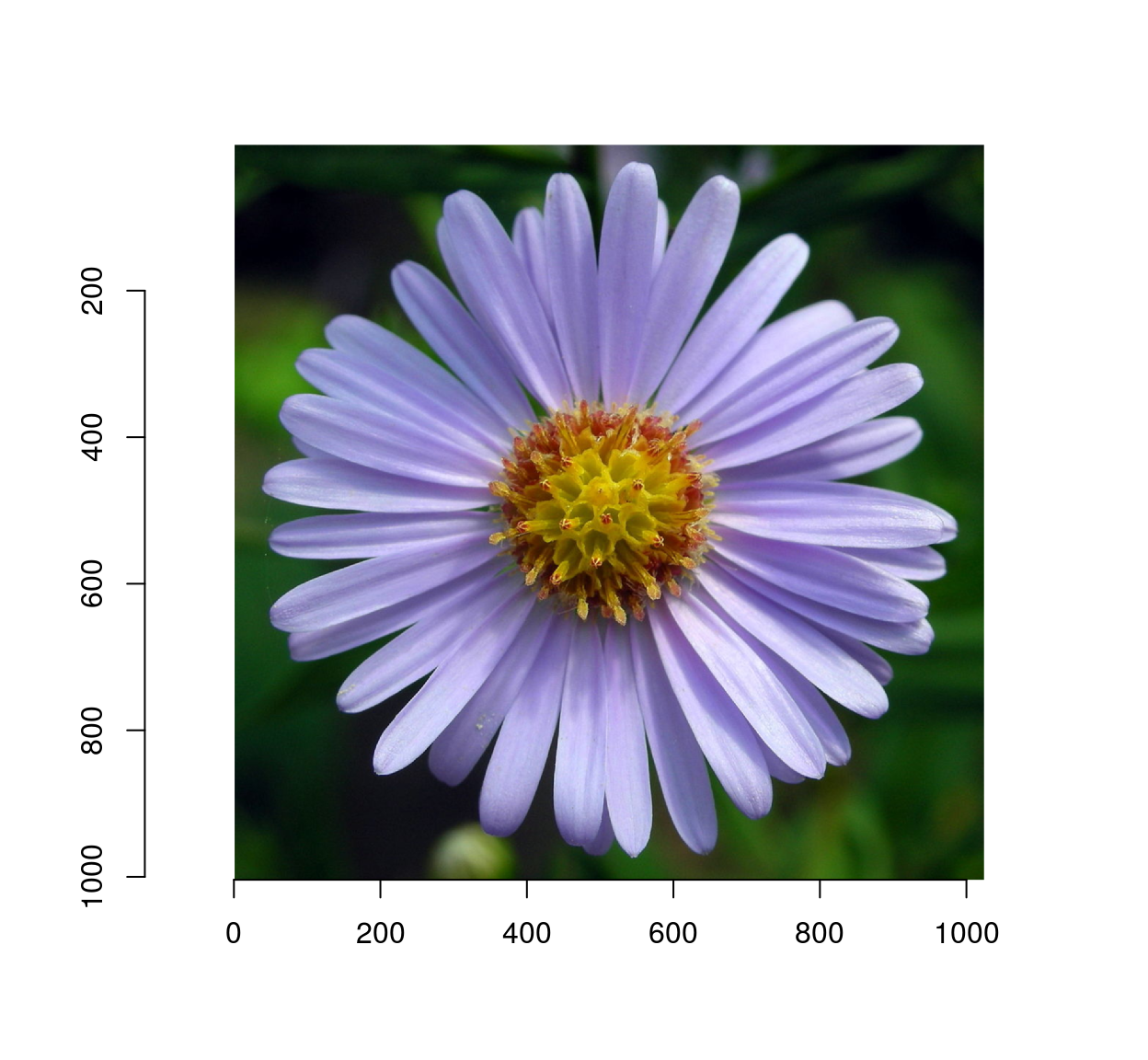

}The picture we’ll use comes from Wikipedia:

im <- load.image("https://upload.wikimedia.org/wikipedia/commons/thumb/f/fd/Aster_Tataricus.JPG/1024px-Aster_Tataricus.JPG")

plot(im)

I hand-selected background and foreground regions (you can do so as well using grabRect):

#Coordinates of a foreground rectangle (x0,y0,x1,y1)

fg <- c(510,521,670,671 )

#Background

bg <- c(791, 28, 1020, 194 )

#Corresponding pixel sets

px.fg <- ((Xc(im) %inr% fg[c(1,3)]) & (Yc(im) %inr% fg[c(2,4)]))

px.bg <- ((Xc(im) %inr% bg[c(1,3)]) & (Yc(im) %inr% bg[c(2,4)]))

plot(im)

highlight(px.fg)

highlight(px.bg,col="blue")

Next we form our training and test data. These need to be triplets of colour values, and for such purposes the CIELAB colour space works well.

im.lab <- sRGBtoLab(im)

#Reshape image data into matrix with 3 columns

cvt.mat <- function(px) matrix(im.lab[px],sum(px)/3,3)

fgMat <- cvt.mat(px.fg)

bgMat <- cvt.mat(px.bg)

labels <- c(rep(1,nrow(fgMat)),rep(0,nrow(bgMat)))For the test data we’ll use all the pixels in the image. It’s redundant (some of those pixels are already categorised) but saves us some reshaping later on:

testMat <- cvt.mat(px.all(im))We’re now ready to run the knn algorithm:

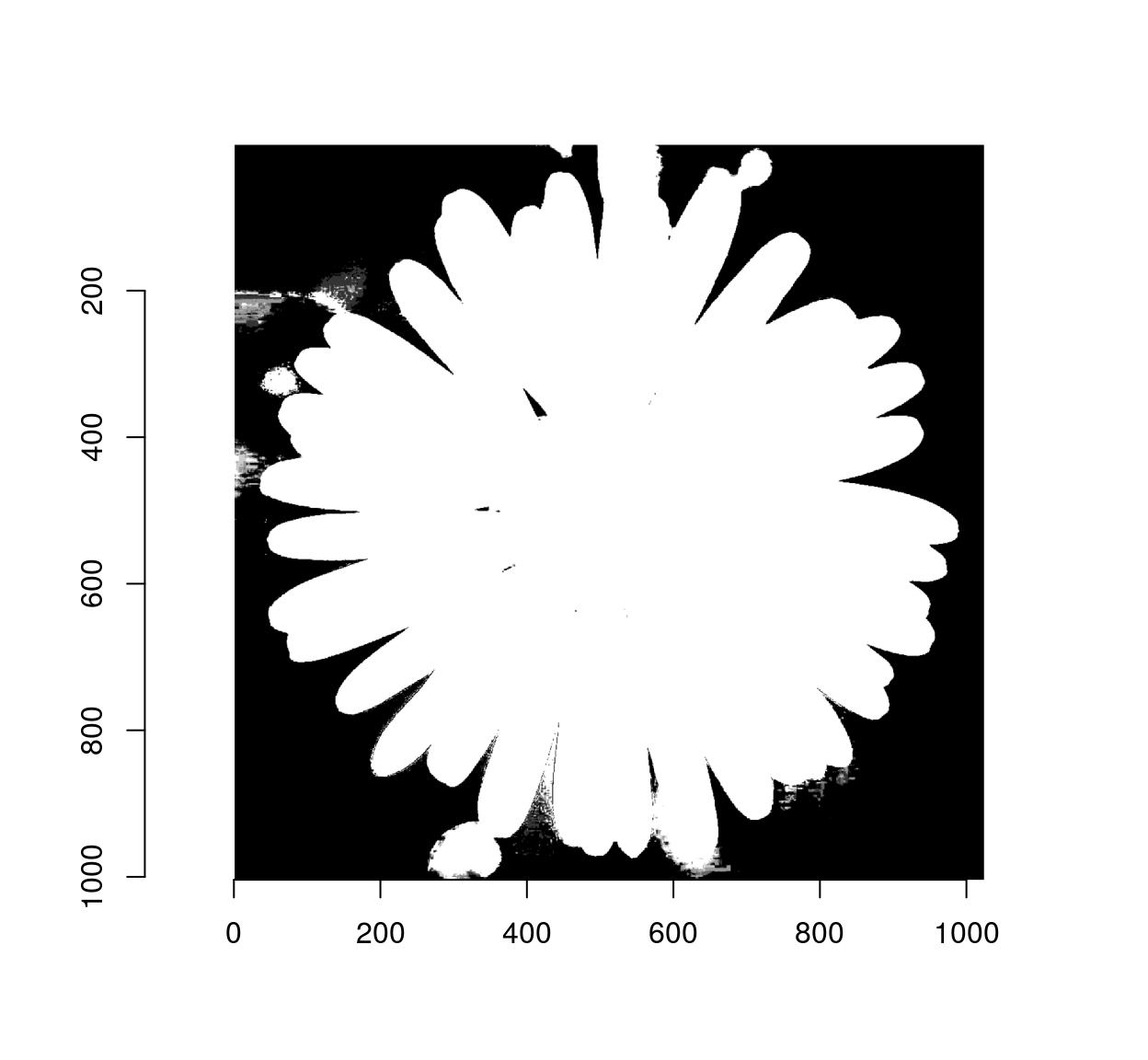

out <- fknn(rbind(fgMat,bgMat),testMat,cl=labels,k=5)out gives the proportion of foreground pixels among the k-nearest neighbours: it works as a confidence measure. We reshape the output into a mask:

msk <- as.cimg(rep(out,3),dim=dim(im))

plot(msk)

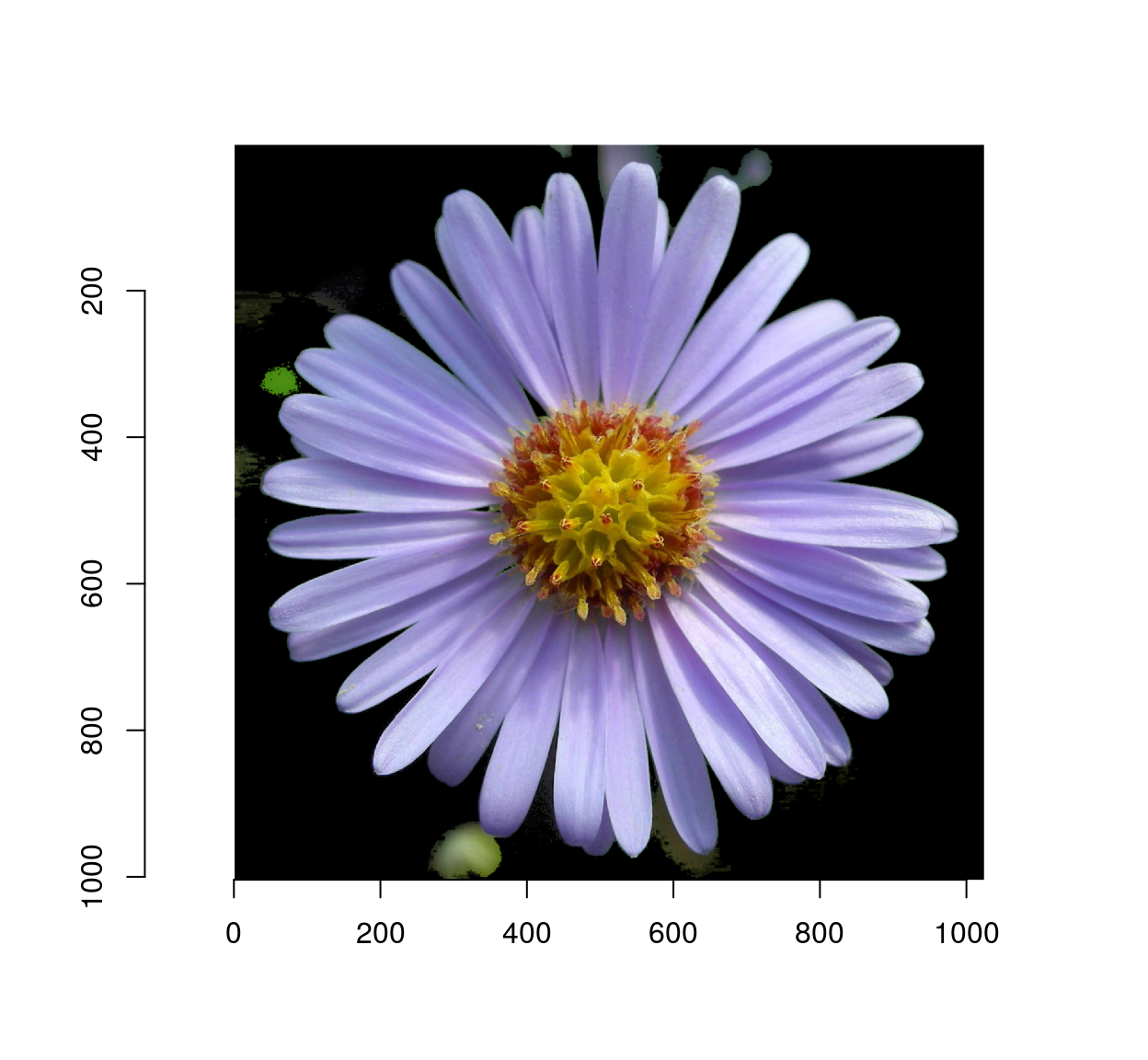

Here’s the final result:

plot(im*msk)

2 Gradient-based algorithm

The idea for this algorithm is borrowed from a blog post by David Tschumperlé. The goal is to select by hand a few points in the foreground of an image, a few points in the background, and let software do the rest.

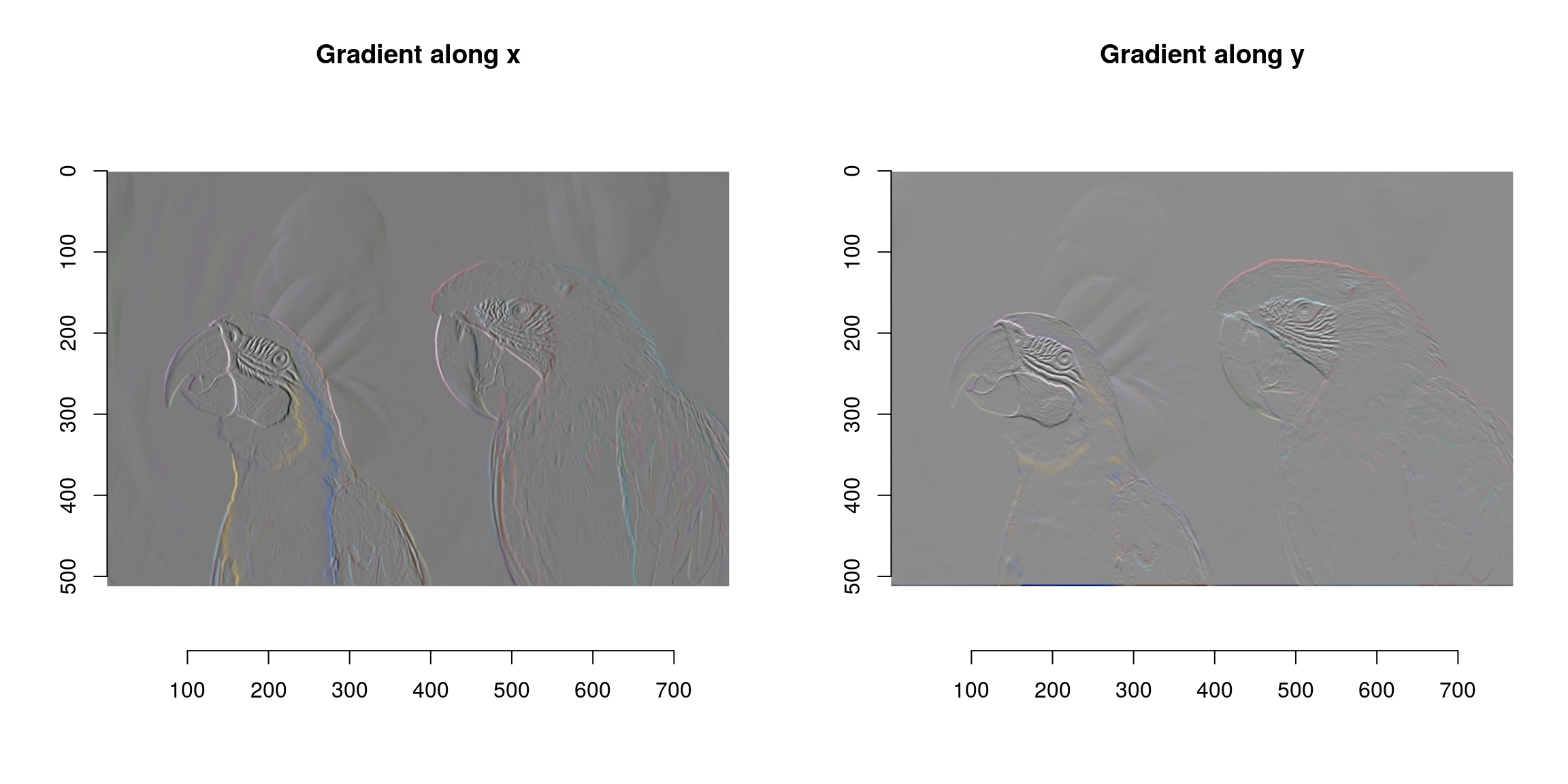

The initial locations (blue and red dots) are called seed points. The way the algorithm works is by spreading region labels outwards from the seed points, but stopping at object boundaries. The first step is to do some edge detection, in order to infer object boundaries. Edges are regions in the image where the luminance changes a lot, so a traditional recipe for edge detection is to use the image gradient:

grad <- imgradient(parrots,"xy")

str(grad)## List of 2

## $ x: cimg [1:768, 1:512, 1, 1:3] 0.00139 0.00612 0.0022 -0.00172 0.00254 ...

## ..- attr(*, "class")= chr [1:3] "cimg" "imager_array" "numeric"

## $ y: cimg [1:768, 1:512, 1, 1:3] 0.01119 0.00923 0.00646 0.00335 0.00287 ...

## ..- attr(*, "class")= chr [1:3] "cimg" "imager_array" "numeric"

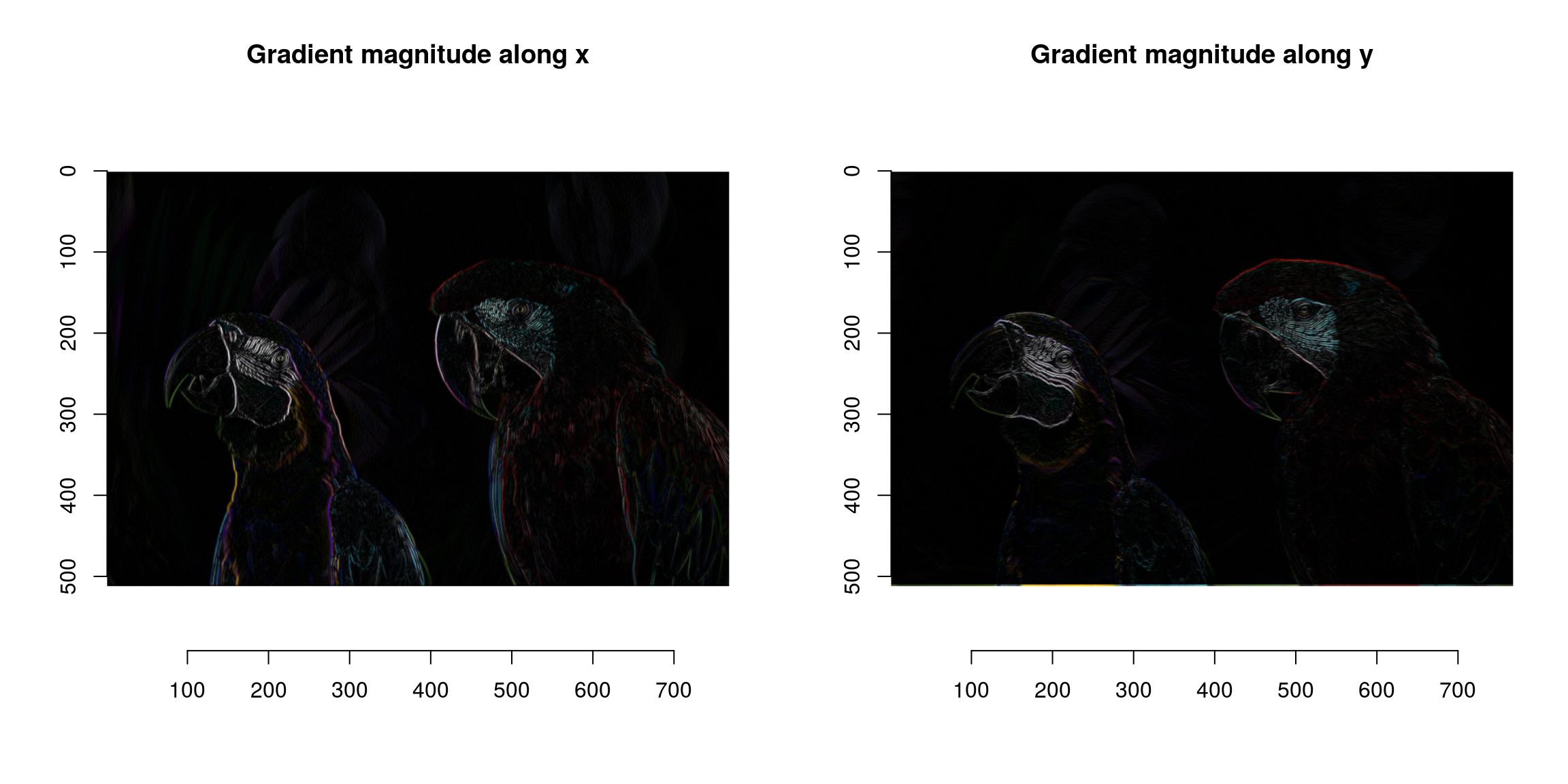

## - attr(*, "class")= chr [1:2] "imlist" "list"layout(t(1:2))

plot(grad$x,main="Gradient along x")

plot(grad$y,main="Gradient along y")

What we now have is a list of two images, one corresponding to the gradient in the x direction and the gradient in the y dimension. Both are computed across the RGB channels separately.

The image gradient isn’t quite what we need yet, since a light-to-dark edge will have negative gradient while a dark-to-light edge will have positive gradient. A good solution is to square the gradient:

grad.sq <- grad %>% map_il(~ .^2)

layout(t(1:2))

plot(sqrt(grad.sq$x),main="Gradient magnitude along x")

plot(sqrt(grad.sq$y),main="Gradient magnitude along y")

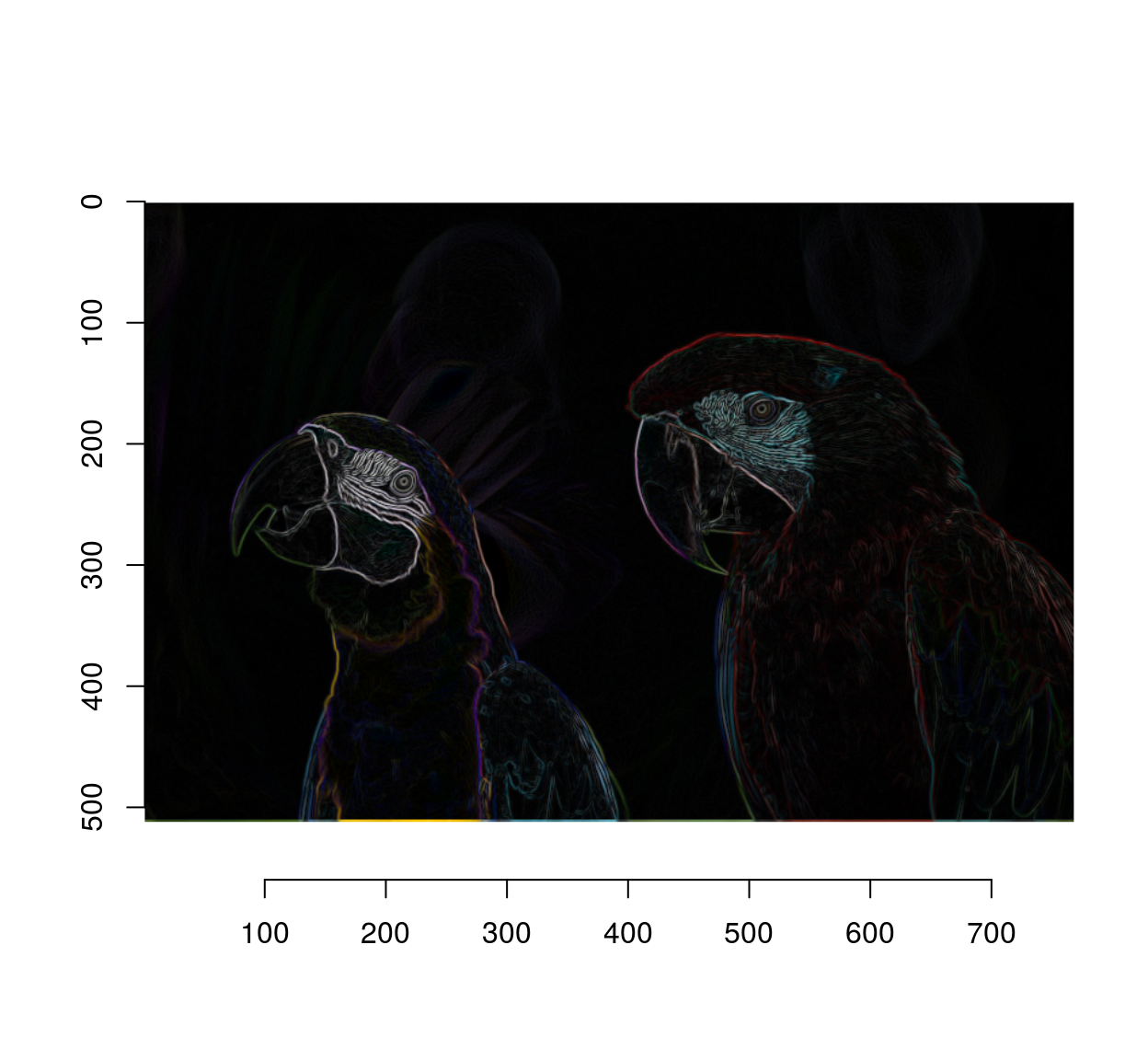

Since we don’t care about the direction of the gradient we can just sum the two:

grad.sq <- add(grad.sq) #Add (d/dx)^2 and (d/dy)^2

plot(sqrt(grad.sq))

and finally we combine all three colour channels by summing:

edges <- imsplit(grad.sq,"c") %>% add

plot(sqrt(edges),main="Detected edges")

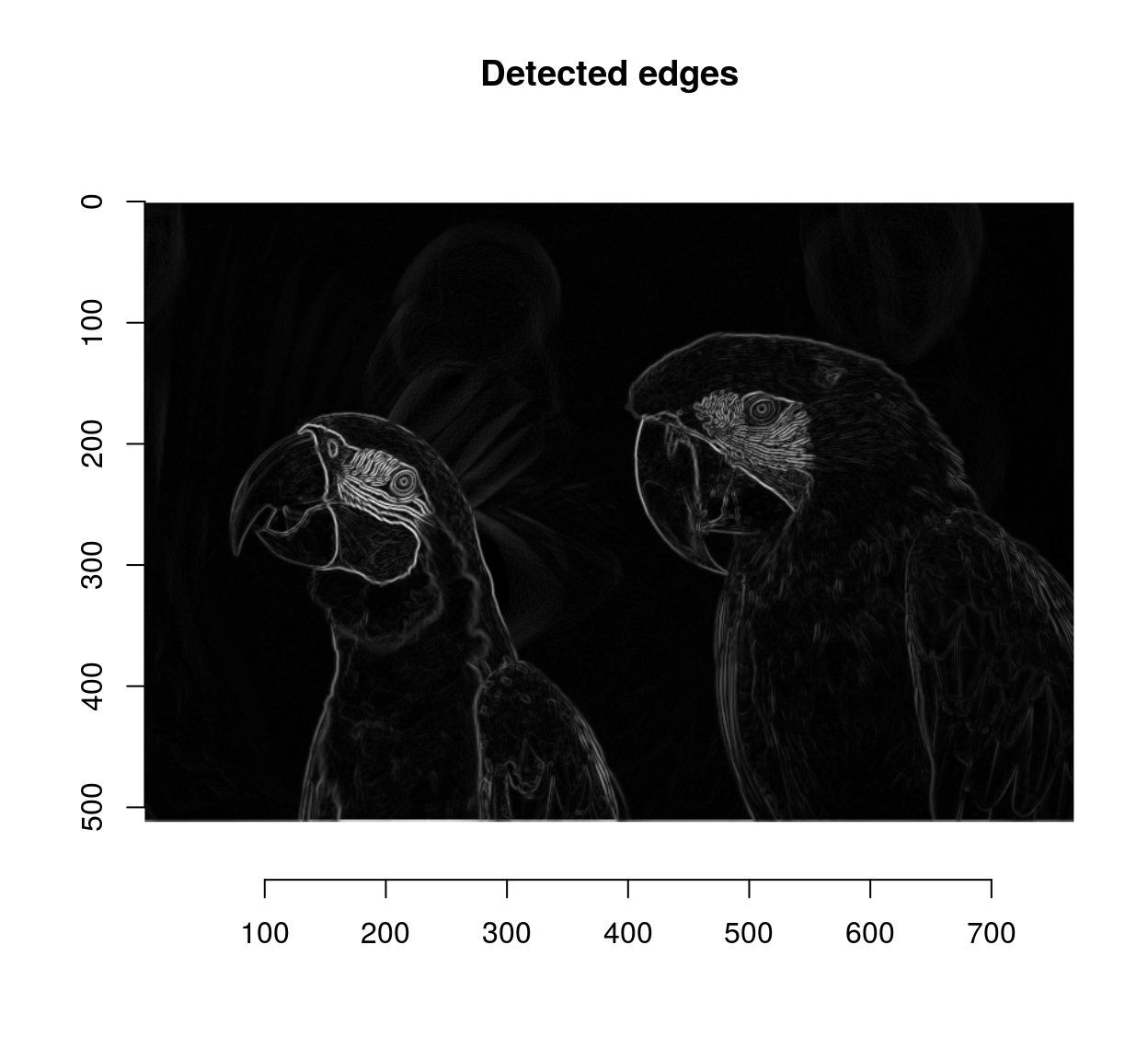

We have too many edges: for example, the edges around the eyes are spurious (they’re not true object boundaries). A way of mitigating the problem is to operate at a lower resolution. I’ll wrap everything into a function

#Sigma is the size of the blur window.

detect.edges <- function(im,sigma=1)

{

isoblur(im,sigma) %>% imgradient("xy") %>% enorm %>% imsplit("c") %>% add

}

edges <- detect.edges(parrots,2) %>% sqrt

plot(edges)

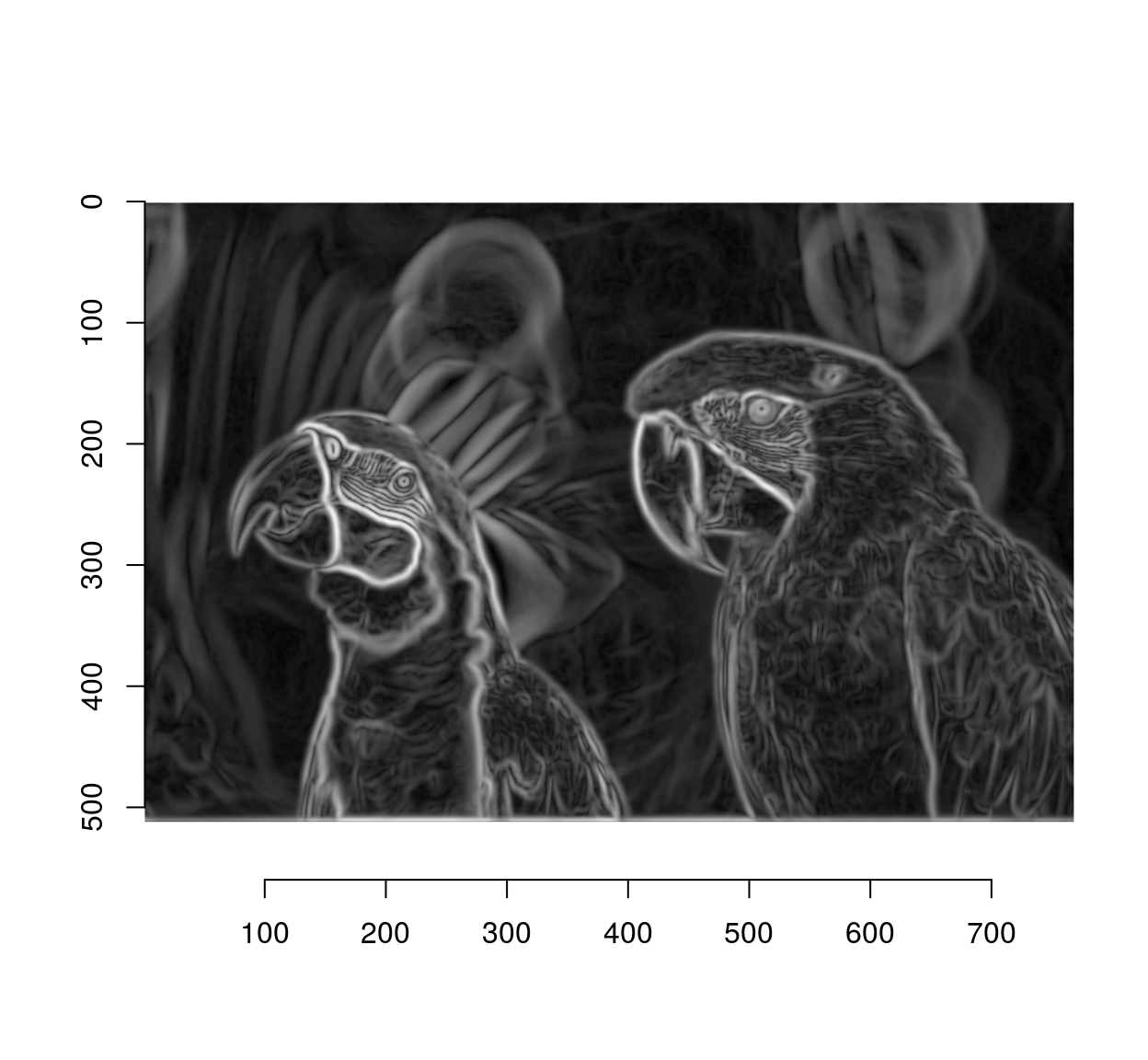

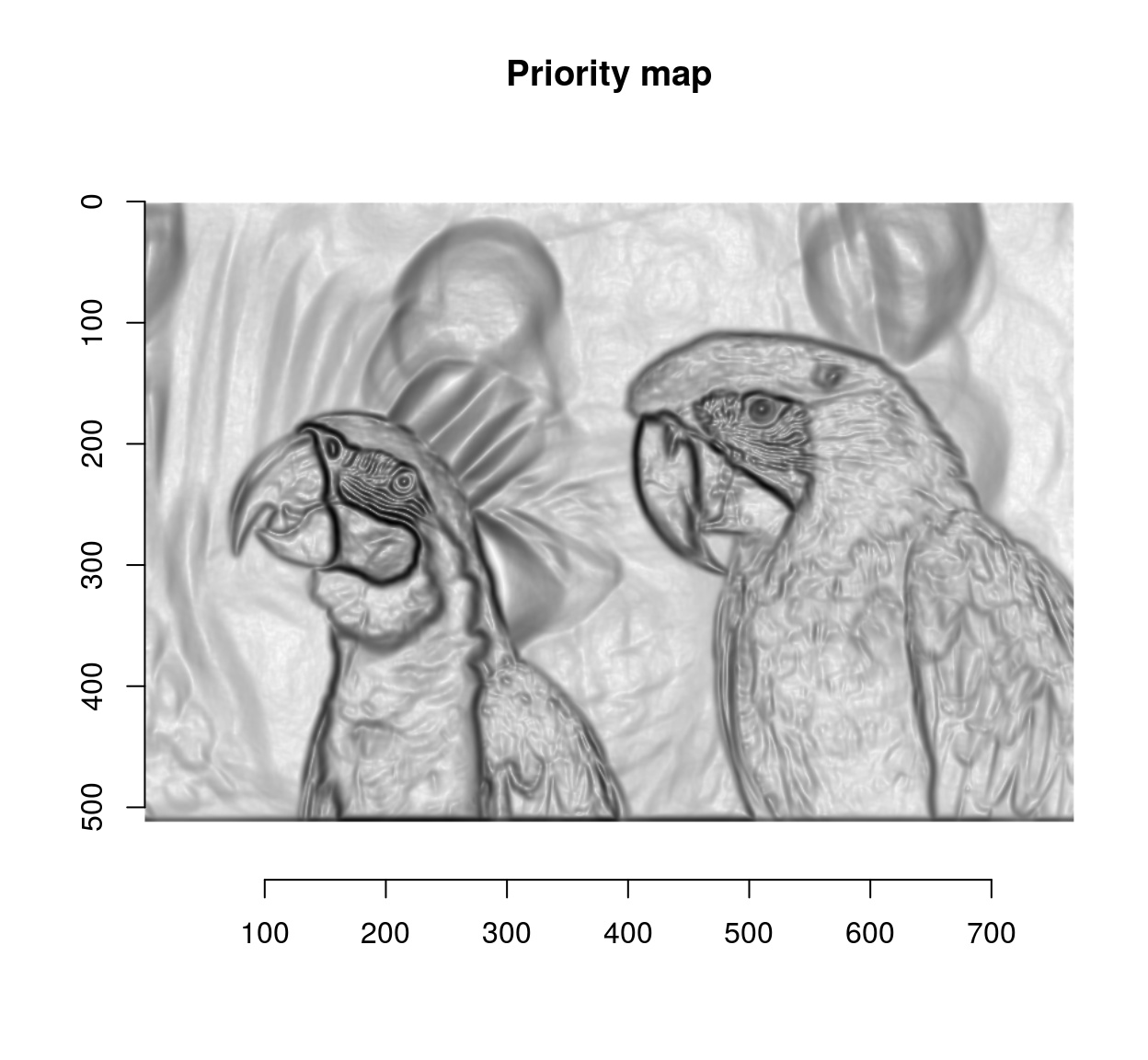

The next step is to use the watershed algorithm for label propagation. The watershed algorithm propagates labels (non-zero pixels) to their non-labelled (zero-valued) neighbours according to a priority map. Labels get propagated first to neighbours with high priority. Here we’re going to give higher priority to non-edge neighbouts. David Tschumperlé uses the following heuristic:

pmap <- 1/(1+edges) #Priority inv. proportional to gradient magnitude

plot(pmap,main="Priority map") #Nice metal plate effect!

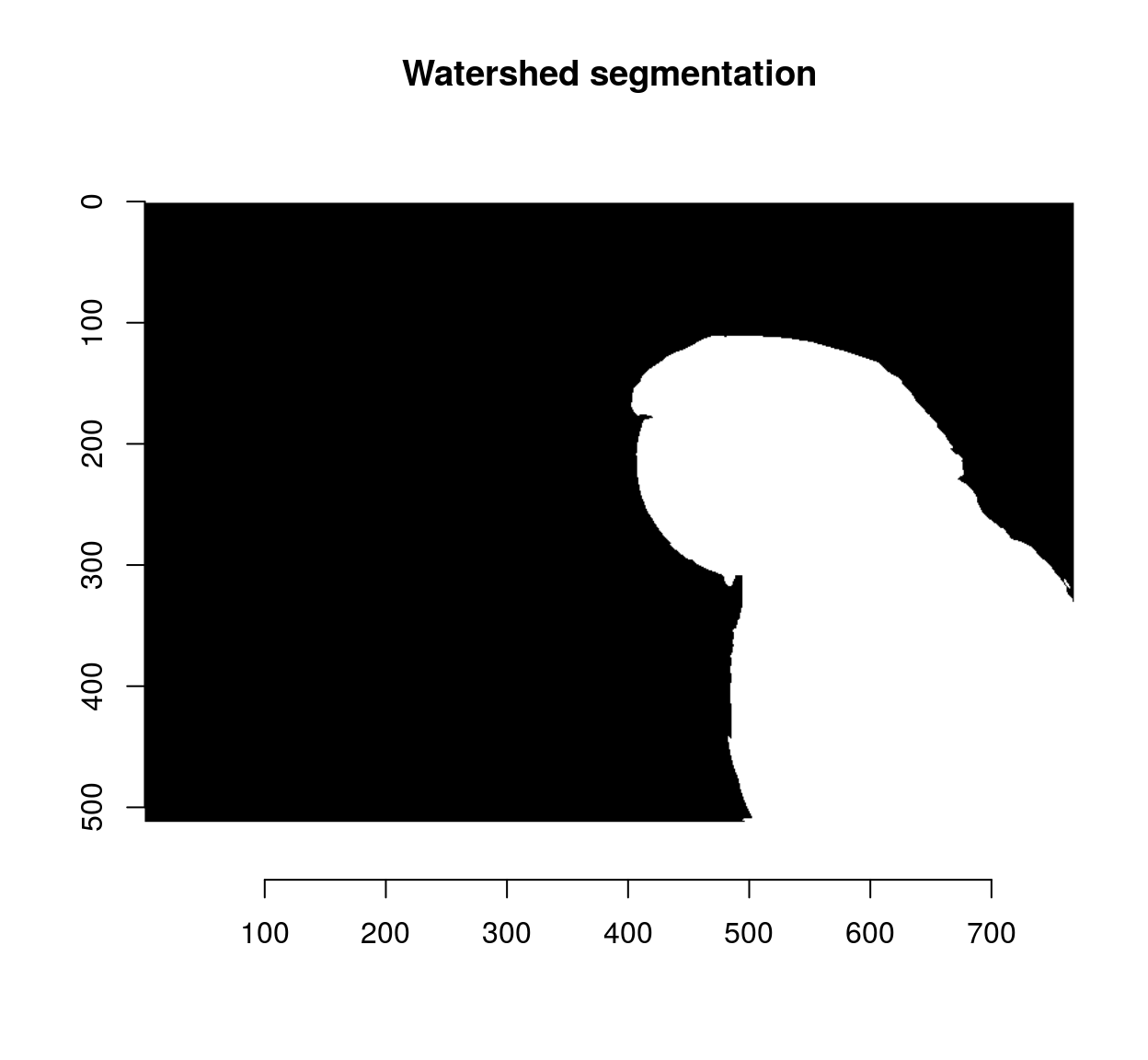

The other element needed for the watershed transform is an image of the same size as pmap, with a few labelled (non-zero) pixels. We’ll start with two:

seeds <- imfill(dim=dim(pmap)) #Empty image

seeds[400,50,1,1] <- 1 #Background pixel

seeds[600,450,1,1] <- 2 #Foreground pixeland we can now run the watershed transform:

wt <- watershed(seeds,pmap)

plot(wt,main="Watershed segmentation")

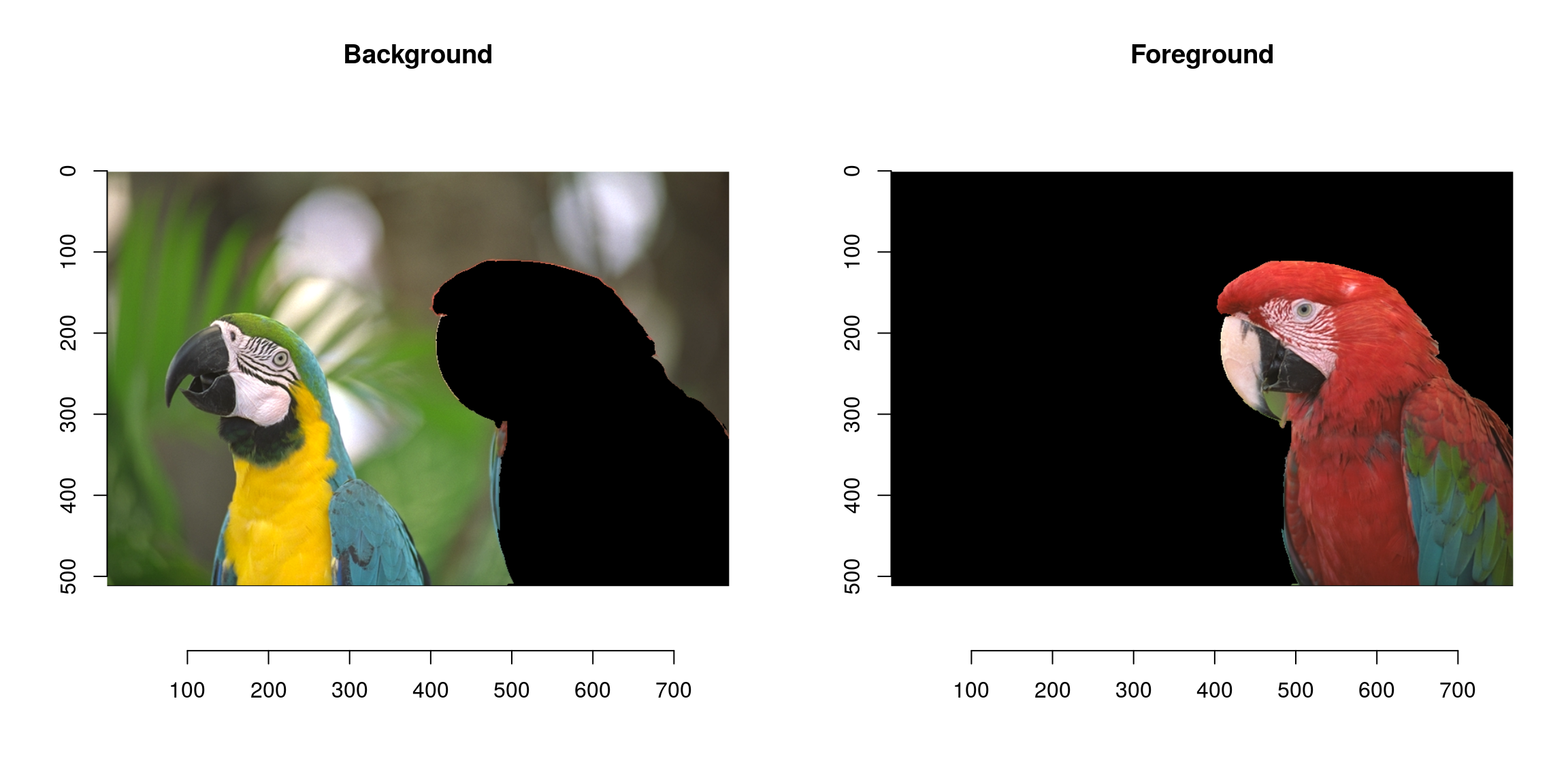

We can use the result as a mask:

mask <- add.colour(wt) #We copy along the three colour channels

layout(t(1:2))

plot(parrots*(mask==1),main="Background")

plot(parrots*(mask==2),main="Foreground")

Not perfect but pretty good already!